HTTP Live Streaming

Two weeks ago I deployed my own HTTP Live Streaming (HLS) solution on Futureland, and the experiment went fairly well. Don't be misled by the name, especially the "Live Streaming" part: HLS is not limited to live streaming. As Wikipedia explains:

HTTP Live Streaming (also known as HLS) is an HTTP-based adaptive bitrate streaming communications protocol developed by Apple Inc. and released in 2009.

The most interesting part of this protocol is the "adaptive bitrate streaming" attribute. HLS is designed to adjust the quality of the media stream to the user's bandwidth, CPU capacity and screen resolution. So regardless of a user's device or location, only the version best suited to the current conditions is downloaded. This results in minimal buffering, faster start times and a better experience on both fast and slow internet connections.

Architecture

To provide a seamless HLS experience to our users, we need the following components:

Encoder

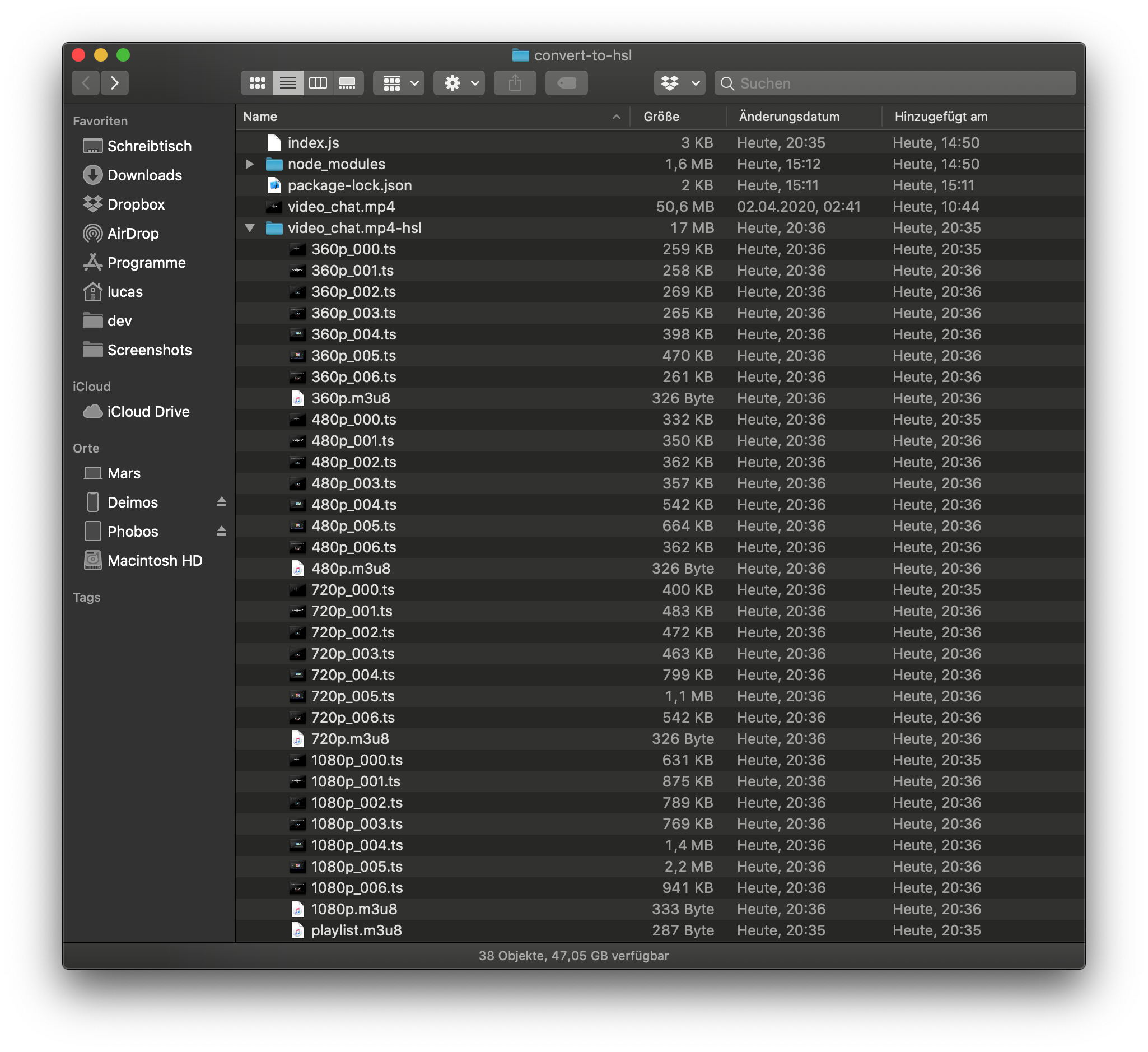

First, every incoming high-bitrate video has to be processed and split into smaller segments a few seconds long (Futureland uses 10 seconds per segment). This can be done, for example, with the excellent FFmpeg. It generates chunks of equal length for every bitrate and resolution, encoded in the already pretty magical H.264 format. Additionally, every chunk is wrapped in an MPEG-2 Transport Stream container and stored as a .ts file. Finally, .m3u8 playlist files are generated: a master playlist that lists every available bitrate and resolution, and, for each of them, a media playlist that references its segments.
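To make the encoding step more concrete, here is a minimal Python sketch that builds an FFmpeg command for a single rendition using FFmpeg's HLS muxer. It is not Futureland's actual pipeline: the `Rendition` type, the example bitrates, the output layout (`<name>/index.m3u8` with `segment_%03d.ts` files) and the helper names are hypothetical. It forces a keyframe every 10 seconds so the segmenter can cut segments of equal length.

```python
import subprocess
from dataclasses import dataclass
from pathlib import Path


@dataclass(frozen=True)
class Rendition:
    """One quality level of the output (hypothetical example values)."""
    name: str           # directory name, e.g. "720p"
    height: int         # output height in pixels
    video_bitrate: str  # ffmpeg bitrate string, e.g. "2800k"
    audio_bitrate: str = "128k"


def build_hls_command(source: str, out_dir: str, r: Rendition,
                      segment_seconds: int = 10) -> list[str]:
    """Return an ffmpeg argv that encodes `source` into one HLS rendition."""
    out = Path(out_dir) / r.name
    return [
        "ffmpeg", "-y", "-i", source,
        # Scale to the target height; -2 keeps the width even, as H.264 needs.
        "-vf", f"scale=-2:{r.height}",
        "-c:v", "libx264", "-b:v", r.video_bitrate,
        # Force a keyframe every segment_seconds so segments can be cut evenly.
        "-force_key_frames", f"expr:gte(t,n_forced*{segment_seconds})",
        "-c:a", "aac", "-b:a", r.audio_bitrate,
        # HLS muxer: writes MPEG-TS (.ts) segments and a VOD media playlist.
        "-f", "hls",
        "-hls_time", str(segment_seconds),
        "-hls_playlist_type", "vod",
        "-hls_segment_filename", str(out / "segment_%03d.ts"),
        str(out / "index.m3u8"),
    ]


def encode(source: str, out_dir: str, r: Rendition) -> None:
    """Create the rendition directory and run ffmpeg (needs ffmpeg on PATH)."""
    (Path(out_dir) / r.name).mkdir(parents=True, exist_ok=True)
    subprocess.run(build_hls_command(source, out_dir, r), check=True)
```

Calling `encode("upload.mp4", "out", Rendition("720p", 720, "2800k"))` once per quality level produces one media playlist per rendition; the master playlist that ties them together still has to be written separately.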

For Futureland, we automatically create 360p, 480p, 720p and 1080p versions of every uploaded video.
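The master playlist that ties such renditions together is plain text. The following Python sketch writes one with the `#EXTM3U` and `#EXT-X-STREAM-INF` tags defined by the HLS specification (RFC 8216). The bandwidth values, the `Variant` type and the `<height>p/index.m3u8` paths are assumptions for illustration, not Futureland's real settings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Variant:
    """One entry of the master playlist (hypothetical example values)."""
    width: int
    height: int
    bandwidth: int  # peak bitrate in bits per second
    uri: str        # relative path to this variant's media playlist


def master_playlist(variants: list[Variant]) -> str:
    """Render an HLS master playlist, listing variants from lowest bandwidth up."""
    lines = ["#EXTM3U"]
    for v in sorted(variants, key=lambda v: v.bandwidth):
        lines.append(
            f"#EXT-X-STREAM-INF:BANDWIDTH={v.bandwidth},"
            f"RESOLUTION={v.width}x{v.height}"
        )
        lines.append(v.uri)
    return "\n".join(lines) + "\n"


# Assumed bitrate ladder for the 360p, 480p, 720p and 1080p renditions.
LADDER = [
    Variant(640, 360, 800_000, "360p/index.m3u8"),
    Variant(854, 480, 1_400_000, "480p/index.m3u8"),
    Variant(1280, 720, 2_800_000, "720p/index.m3u8"),
    Variant(1920, 1080, 5_000_000, "1080p/index.m3u8"),
]
```

Writing `master_playlist(LADDER)` to a file next to the rendition directories gives players a single URL to start from. A production playlist should normally also declare a `CODECS` attribute on each `#EXT-X-STREAM-INF` line, which this sketch omits for brevity.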

Server

Once the encoder is done, we can start serving the newly generated files to clients. All we need is an HTTP server that hosts the generated .m3u8 and .ts files. Since these files never change after encoding, they can be cached aggressively.
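Any static file server works for this. As a minimal sketch, Python's standard-library `http.server` can be extended to send the HLS MIME types (`application/vnd.apple.mpegurl` for playlists, `video/mp2t` for segments) together with a long-lived `Cache-Control` header. The one-year `max-age` and the class and function names are assumptions; in production you would more likely configure a web server or CDN along the same lines.

```python
from functools import partial
from http.server import SimpleHTTPRequestHandler, ThreadingHTTPServer

# MIME types for HLS playlists and MPEG-TS segments.
HLS_TYPES = {
    ".m3u8": "application/vnd.apple.mpegurl",
    ".ts": "video/mp2t",
}
ONE_YEAR = 365 * 24 * 60 * 60  # assumed max-age in seconds


def cache_headers(path: str) -> dict[str, str]:
    """Cache headers for a request path (the query string is ignored)."""
    if path.split("?", 1)[0].endswith(tuple(HLS_TYPES)):
        return {"Cache-Control": f"public, max-age={ONE_YEAR}, immutable"}
    return {}


class HLSHandler(SimpleHTTPRequestHandler):
    # Extend the default extension-to-MIME map with the HLS types.
    extensions_map = {**SimpleHTTPRequestHandler.extensions_map, **HLS_TYPES}

    def send_response(self, code, message=None):
        self._status = code  # remember the status so error pages are not cached
        super().send_response(code, message)

    def end_headers(self):
        if getattr(self, "_status", None) == 200:
            for name, value in cache_headers(self.path).items():
                self.send_header(name, value)
        super().end_headers()


def serve(directory: str, port: int = 8000) -> None:
    """Serve the encoder output directory over HTTP until interrupted."""
    handler = partial(HLSHandler, directory=directory)
    ThreadingHTTPServer(("", port), handler).serve_forever()
```

This aggressive caching only fits on-demand videos like the ones described here. For an actual live stream, the playlists keep changing and must not be cached for long.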

Client

Once the files are being served, clients can request the .m3u8 manifest. The client uses this file to decide which version of the chunks to load. It usually starts with the lowest-bitrate stream. If the network turns out to be fast enough for a higher-bitrate segment, the next chunk is requested at a higher bitrate. If the client notices lower throughput than before, it switches back to lower-bitrate segments. At the same time, it assembles the segments in sequence to allow uninterrupted playback for the user.
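The switching logic can be illustrated with a simplified Python sketch: parse the variants from the master playlist, keep an exponentially weighted moving average of the measured download throughput, and pick the highest bitrate that fits within a safety margin. The smoothing factor `alpha=0.3`, the 0.8 safety factor and all names are arbitrary assumptions; real players such as hls.js use more elaborate heuristics.

```python
import re
from typing import Optional

# Match BANDWIDTH=... but not AVERAGE-BANDWIDTH=...
_BANDWIDTH = re.compile(r"(?:^|,)BANDWIDTH=(\d+)")
_TAG = "#EXT-X-STREAM-INF:"


def parse_variants(master: str) -> list[tuple[int, str]]:
    """Return (bandwidth, uri) pairs from a master playlist, lowest first."""
    lines = [line.strip() for line in master.splitlines() if line.strip()]
    variants = []
    for i, line in enumerate(lines[:-1]):
        if line.startswith(_TAG):
            match = _BANDWIDTH.search(line[len(_TAG):])
            if match:
                # The variant's URI is on the line following the tag.
                variants.append((int(match.group(1)), lines[i + 1]))
    return sorted(variants)


class ThroughputEstimator:
    """Exponentially weighted moving average of download speed in bit/s."""

    def __init__(self, alpha: float = 0.3) -> None:
        self.alpha = alpha  # weight of the newest sample (assumed value)
        self.estimate: Optional[float] = None

    def add_sample(self, segment_bytes: int, seconds: float) -> None:
        sample = segment_bytes * 8 / seconds
        if self.estimate is None:
            self.estimate = sample
        else:
            self.estimate = self.alpha * sample + (1 - self.alpha) * self.estimate


def choose_variant(variants: list[tuple[int, str]],
                   estimate: Optional[float],
                   safety: float = 0.8) -> tuple[int, str]:
    """Pick the highest-bandwidth variant that fits the throughput estimate."""
    if estimate is None:
        return variants[0]  # no measurement yet: start with the lowest bitrate
    fitting = [v for v in variants if v[0] <= estimate * safety]
    # Fall back to the lowest variant if even that one does not fit.
    return fitting[-1] if fitting else variants[0]
```

After each segment download, a player built this way would call `add_sample` with the segment's size and download time, then ask `choose_variant` which media playlist to take the next segment from. The moving average keeps a single slow or fast download from causing the quality to jump back and forth.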

When to use it

HLS can be used for many different purposes. It is especially useful for building:

- A platform that supports user-uploaded videos, like Futureland or Twitter

- A video-on-demand service like YouTube or Netflix

- Video support in a custom CMS

HLS is highly compatible and can be used in every modern browser, either natively (as in Safari) or with the help of the Media Source Extensions API. I recommend hls.js for embedding HLS videos on your site. On Futureland, we additionally provide a high-quality .mp4 fallback in case the Media Source Extensions API is unavailable for some reason. This also has the advantage that we can offer the videos for download.

Conclusion

HLS is currently the best way to provide your users with adaptive bitrate streaming and a responsive experience regardless of their internet connection or device. The only downside I can see right now is that encoding every video into smaller .ts segments is computationally expensive, takes a lot of time and, of course, uses more disk space than a single .mp4 video, since every resolution is stored separately.
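How much extra disk space depends on the bitrate ladder. As a rough estimate, a stream's size is its bitrate times its duration divided by 8. The Python sketch below compares a hypothetical four-step ladder with a single .mp4 at the top bitrate; the bitrates are assumptions, and container overhead (MPEG-TS adds some) is ignored.

```python
def storage_bytes(bitrates_bps: list[int], duration_s: int) -> int:
    """Approximate size in bytes: bitrate (bit/s) * duration (s) / 8, summed."""
    return sum(bitrates_bps) * duration_s // 8


# Hypothetical ladder for 360p, 480p, 720p and 1080p (bits per second).
LADDER = [800_000, 1_400_000, 2_800_000, 5_000_000]

one_minute = 60
hls_total = storage_bytes(LADDER, one_minute)          # all renditions
single_mp4 = storage_bytes([LADDER[-1]], one_minute)   # one 1080p file
print(f"HLS: {hls_total / 1e6:.1f} MB, mp4: {single_mp4 / 1e6:.1f} MB")
# prints "HLS: 75.0 MB, mp4: 37.5 MB"
```

With these assumed numbers, the segmented ladder needs about twice the space of a single 1080p file, before counting an .mp4 fallback kept alongside it.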

If you want to see how it works and feels in practice, check out Futureland. HLS has been enabled for all video outputs since May 18th and will be rolled out to previously uploaded videos soon.